To get the most out of Google Search Console, you need a good understanding of what the different index statuses mean. Google’s documentation about GSC is quite dense and a pretty dry read. We hope this article does a better job of explaining Google’s indexing terms succinctly and clearly.

The statuses we’ll go over today include:

- Alternate Page With Proper Canonical Tag

- Crawled – Currently Not Indexed

- Page With Redirect

- Blocked by Robots.txt

- Excluded by ‘noindex’ Tag

- Blocked Due to Access Forbidden (403)

- Duplicate, Google Chose Different Canonical Than User

- Duplicate Without User-Selected Canonical

- Discovered – Currently Not Indexed

- Not Found (404)

- Soft 404

- URL Blocked Due to Other 4xx Issue

- Server Error (5xx)

Google Search Console

Glossary: Google Search Console (GSC) is a free tool from Google that allows you to monitor your site’s presence on Google Search. It provides valuable data on how Google views your site, including information on search traffic, mobile usability, and issues that might affect your site’s on-page SEO and performance in search results. GSC also offers performance tracking metrics, like the amount of traffic to your website, impressions, click-through rate, and your average search position.

You can also use GSC to submit your sitemap to Google, which helps Google discover the pages on your site and understand how they’re organised.

See also: Indexing, Deindexing, Crawling

Further reading: The Best Free Tools for Checking Website Traffic and Best Free Tools We Use

Why checking index status is important

You need to monitor your website’s index status regularly to maintain your presence on search. If Google hasn’t indexed your pages properly, they won’t appear in search results, no matter how much time you’ve spent on SEO.

Spot indexing issues early

Checking your index status helps you identify problems before they seriously impact your traffic. When Google can’t index your pages correctly, potential customers can’t find you. Regular checks mean you can spot and fix these issues quickly, minimising any negative effect on your business.

Indexing

Glossary: Indexing is when Google crawls a page, processes the content and stores it in its database. This is also when Google decides if a page is eligible and valuable enough to be listed in search results.

See also: Mobile-first indexing, Canonical tag, Crawl budget, Google Search Console

While it’s unrealistic to expect every single one of your web pages to be indexed on Google, it’s important to check that your most important web pages are indexed by checking Google Search Console. This tool allows you to check whether your pages are indexed or not and submit re-indexing requests if you think the Google algorithm and its crawl bots have made a mistake.

Track your website’s growth

Index reports give you insight into how your website is growing and changing over time. You can see how quickly Google crawls and processes new content, helping you understand if your site architecture supports efficient crawling.

Crawling

Glossary: Crawling is the systematic process search engine bots use to ‘read’ web pages and gather information about their content, structure and internal linking.

Search engines like Google then use this information to decide whether a web page should be indexed in search results and where it ranks. Websites with crawl-friendly structures and well-optimised content are more likely to be indexed and rank highly in search engine results.

See also: Google Search Console, Sitemaps, Indexing

Identify technical SEO problems

A lot of indexing issues come from technical problems you might not notice when browsing your own site as a visitor. These could include:

- Robots.txt errors blocking important pages

- Canonical issues creating duplicate content

- Server issues making pages inaccessible to Google

- Mobile usability issues affecting how Google indexes your site

Finding these problems early through index status reports lets you fix them before they harm your rankings.

Improve crawl budget efficiency

Google allocates a certain amount of resources to each website. This is known as a ‘crawl budget’. By checking which pages are being indexed and which aren’t, you can make informed decisions about how to use this budget effectively. This might mean removing low-value pages, improving internal linking, or updating your XML sitemap.

Crawl budget

Glossary: Crawl budget refers to the number of pages a search engine will crawl on your website within a given time. Search engines allocate more crawl budget to larger, more authoritative sites that update frequently. By optimising your crawl budget through efficient site structure, removing low-value pages, and fixing broken links, you help search engines find and index your most important content.

See also: Indexing, Crawling, Content pruning

Confirm your website changes work

After making major changes to your website, like redesigning your site structure, implementing redirects, or launching new sections, index status reports help you confirm whether Google has properly processed these changes.

What is the page indexing report?

The page indexing report, formerly known as the index coverage report, is a tool in GSC that tells you which of your pages aren’t indexed in Google search results and why.

Reasons for unindexed pages

Underneath the graph of indexed vs non-indexed pages, you can see why Google hasn’t indexed certain pages. Understanding what these phrases mean is essential to fixing any underlying issues.

Disclaimer on indexing statuses

There is no comprehensive, reliable, or up-to-date list of all possible index statuses available online. We only have access to the list of indexing statuses we can see on the sites we manage and the ones we can verify as current and factual from other sites.

If you see an error in your Google Search Console page indexing report that we haven’t mentioned, let us know. We’ll research it and add it to this list.

With that disclaimer out of the way, let’s get into what Google says about unindexed pages, what it means in plain English, and how to fix it:

Alternate page with proper canonical tag

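Starting off with a relatively simple one. ‘Alternate page with proper canonical tag’ means that this URL is a duplicate or alternate version of another page, and its canonical tag correctly points to that other page. Google indexes the canonical page and leaves this alternate version out of search results.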

Canonical tag

Glossary: A canonical tag is a piece of HTML code that tells search engines which version of a page is the main one when similar content exists in multiple places. This helps you avoid duplicate content issues and directs your SEO value to your preferred page. It’s like telling Google ‘This is the original page that I want you to show in search results’.

See also: Indexing, Duplicate, Google Search Console


Should you ignore ‘Alternate page with proper canonical tag’, or troubleshoot?

Check that the canonical tag on each of these URLs points to the page you actually want to show on Google, and make sure you don’t need any of the alternate versions indexed in their own right. If both are true, you can safely ignore this index status. It means Google is respecting your canonical tags and showing the canonical page in search results, which is a good thing!
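If you want to double-check where a page’s canonical tag points, the tag sits in the page’s `<head>` and looks like `<link rel="canonical" href="https://example.com/shoes/">`. Here’s a minimal Python sketch, using only the standard library, that reads it from a page’s HTML. The `find_canonical` helper and the example.com URLs are hypothetical, and the sketch ignores canonicals sent in HTTP `Link` headers.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr_map = dict(attrs)
        # rel can hold several space-separated values
        rel_values = (attr_map.get("rel") or "").lower().split()
        if "canonical" in rel_values and attr_map.get("href"):
            self.canonicals.append(attr_map["href"])


def find_canonical(html):
    """Return the declared canonical URL, or None if there isn't exactly one."""
    finder = CanonicalFinder()
    finder.feed(html)
    # More than one canonical tag is ambiguous, so report no clear canonical.
    return finder.canonicals[0] if len(finder.canonicals) == 1 else None


page = '<head><link rel="canonical" href="https://example.com/shoes/"></head>'
print(find_canonical(page))  # https://example.com/shoes/
```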

Crawled – currently not indexed

‘Crawled – currently not indexed’ means Google has found and read your page but decided not to add it to its search index, at least for now. This status can be frustrating because Google acknowledges your page exists but chooses not to show it in search results, and the report doesn’t tell you why.

Google might not index your page for a few reasons:

- Your content is too similar to other pages

- Google thinks site-wide quality is too low

- The page has thin content with little value

- Your site has too many pages competing for Google’s crawl budget

- Google has temporarily placed the page in a queue to index later

Thin content

Glossary: Thin content refers to web pages that provide little useful information or value to users. This can include pages with minimal text, duplicate content, or low-quality articles that don’t provide any answers or insights. Search engines may rank these pages lower because they don’t meet user needs. To improve SEO, it’s important to create high-quality, informative content that engages visitors and keeps them on the page longer.

See also: Scaled content abuse, Content audit

Should you ignore ‘crawled – currently not indexed’, or troubleshoot?

This index status doesn’t always mean there’s a problem. New websites or pages often stay in this limbo state for a while as Google evaluates them. However, if important pages stay in this state for more than a few weeks, you should take action.

To improve your chances of getting these pages indexed:

- Add more unique, helpful content to the page

- Improve internal linking to these pages from your indexed content

- Check your page loads properly and quickly

- Make sure you haven’t accidentally blocked indexing with noindex tags

Internal links

Glossary: Internal links are connections between pages on your website. They help search engines understand which pages are most important and how your content relates to each other. For example, if multiple pages link to your services page, search engines see it as valuable content. Internal links also guide visitors through your website, encouraging them to explore more pages.

See also: External links

Page with redirect

When Google shows ‘Page with redirect’ in your index report, it means Google found a non-canonical URL that redirects to another page. Google won’t include redirected URLs in search results; it shows the final destination page instead.

Redirects are a normal part of website management. You’ll often use them when:

- You’ve moved content to a new URL

- You’ve consolidated similar pages

- You’ve switched from HTTP to HTTPS

- You’re directing multiple domain names to one website

Should you ignore ‘page with redirect’, or troubleshoot?

This status usually isn’t a problem. It helps Google understand your site structure and follow your preferred paths through your website.

However, watch out for these common redirect issues:

- Redirect chains (where page A redirects to page B, which redirects to page C)

- Redirect loops (where pages redirect in a circle)

- Temporary (302) redirects used for permanent changes

To check your redirects, use a browser extension like Redirect Path or an online redirect checker like httpstatus.io. Make sure all redirects point directly to their final destination using the appropriate redirect type (301 for permanent, 302 for temporary).
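If you have a list of your redirects (for example, exported from your CMS or a site crawler), a short script can spot chains and loops before Google does. This is a minimal Python sketch that assumes the redirects are stored as a simple ‘from URL, to URL’ map; `trace_redirects` and the example URLs are hypothetical, and the sketch doesn’t check whether each hop is a 301 or a 302.

```python
def trace_redirects(start, redirects, max_hops=10):
    """Follow a redirect map from `start` and report any chain or loop.

    `redirects` maps each redirecting URL to its target (hypothetical data,
    e.g. exported from your CMS). URLs not in the map are final destinations.
    Returns (path, problem) where problem is None, "chain", "loop" or
    "too many hops".
    """
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"  # we've come back to a URL already visited
        seen.add(current)
        if len(path) - 1 > max_hops:
            return path, "too many hops"
    hops = len(path) - 1
    # One hop is a normal redirect; two or more is a chain worth flattening.
    return path, "chain" if hops > 1 else None


site_redirects = {
    "http://example.com/a": "https://example.com/a",
    "https://example.com/a": "https://example.com/b",
    "https://example.com/x": "https://example.com/y",
    "https://example.com/y": "https://example.com/x",
}
print(trace_redirects("http://example.com/a", site_redirects))
# (['http://example.com/a', 'https://example.com/a', 'https://example.com/b'], 'chain')
```

A chain like the one above is fixed by pointing `http://example.com/a` straight at `https://example.com/b`.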

Blocked by robots.txt

When you see ‘Blocked by robots.txt’ in your index report, it means Google found links to these pages but couldn’t crawl them because your robots.txt file blocked access.

Robots.txt files work like a set of instructions telling search engines which parts of your site they can and cannot visit. For example, these two lines tell OpenAI’s ChatGPT-User agent not to visit any part of the site:

User-agent: ChatGPT-User

Disallow: /

Should you ignore ‘blocked by robots.txt’, or troubleshoot?

Unlike other indexing issues, ‘blocked by robots.txt’ often indicates an intentional choice. Common pages you might deliberately block include:

- Admin sections

- User account areas

- Shopping cart pages

- Staging environments

However, blocking a page in robots.txt doesn’t guarantee it stays out of search results. Google might still index the URL (without its content) if other pages link to it. If you don’t want a page to be indexed, use a ‘noindex’ tag instead, and don’t block that page in robots.txt, because Google has to crawl the page to see the tag.

To check if you’re accidentally blocking important content:

- Go to your website’s robots.txt file (yourwebsite.com.au/robots.txt)

- Look for ‘Disallow:’ directives

- Make sure they don’t include paths to pages you want indexed

If you find valuable content that’s blocked by robots.txt and shouldn’t be, edit your robots.txt file to grant access to Google.
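Python’s standard library includes a robots.txt parser, so you can test a list of important URLs against your rules. Treat the sketch below as a rough first check under a few assumptions: the rules and URLs are examples, `blocked_urls` is a hypothetical helper, and Python’s parser applies rules in file order rather than Google’s most-specific-match logic, and it doesn’t support Google’s `*` and `$` wildcards.

```python
from urllib.robotparser import RobotFileParser

# Example rules: replace with the contents of your own robots.txt.
ROBOTS_TXT = """
User-agent: *
Disallow: /cart/
Disallow: /admin/

User-agent: ChatGPT-User
Disallow: /
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs in `urls` that the rules stop `user_agent` from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


important_pages = [
    "https://yourwebsite.com.au/services/",
    "https://yourwebsite.com.au/cart/checkout",
]
print(blocked_urls(ROBOTS_TXT, important_pages))
# ['https://yourwebsite.com.au/cart/checkout']
```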

Excluded by ‘noindex’ tag

When your index report shows ‘Excluded by ‘noindex’ tag’, it means Google found and crawled the page but won’t include it in search results because the page carries a noindex directive. This tag explicitly tells Google not to show the page, even though Google can access and read it.

Unlike robots.txt, which prevents pages from being crawled, noindex allows your page to be crawled but prevents indexing. You’ll typically add noindex to pages like:

- Thank you pages

- Login pages

- Internal search results

- Duplicate content pages

- Thin content pages that you still want users to access

Should you ignore ‘excluded by ‘noindex’ tag’, or troubleshoot?

This status usually indicates a deliberate choice rather than an error. However, check for these common mistakes:

- Noindex tags accidentally left on important pages

- Development or staging sites that moved to production with noindex still active

- Template settings that apply noindex too broadly

To fix accidental noindex issues, remove the noindex tag (or the X-Robots-Tag HTTP header, if that’s where the directive is set) and wait for Google to recrawl the page.
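A noindex directive can live in two places: a `<meta name="robots" content="noindex">` tag in the page’s HTML, or an `X-Robots-Tag: noindex` HTTP response header. Checking both is a quick way to catch directives left behind by templates or staging settings. This is a minimal Python sketch; `has_noindex` is a hypothetical helper, you’d supply the HTML and response headers yourself, and it only handles the common forms of the directive (including `none`, which Google treats as `noindex, nofollow`).

```python
from html.parser import HTMLParser

NOINDEX_VALUES = {"noindex", "none"}  # Google treats "none" as "noindex, nofollow"


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        name = (attr_map.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attr_map.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html, headers):
    """True if the page's HTML or its X-Robots-Tag header asks for noindex."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    # Header names are case-insensitive, so normalise them first.
    lowered = {k.lower(): v for k, v in headers.items()}
    header_value = lowered.get("x-robots-tag", "")
    # Values can be prefixed with a crawler name, e.g. "googlebot: noindex".
    # This simplified check doesn't look at which crawler the prefix names.
    header_directives = [d.split(":")[-1].strip().lower() for d in header_value.split(",")]
    return bool(NOINDEX_VALUES & set(finder.directives + header_directives))


print(has_noindex('<meta name="robots" content="noindex, follow">', {}))  # True
print(has_noindex("<p>Hello</p>", {"X-Robots-Tag": "googlebot: noindex"}))  # True
```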

Blocked due to access forbidden (403)

A blocked due to access forbidden (403) error on Google Search Console means Google tried to crawl a page on your site but was denied access.

Should you ignore ‘blocked due to access forbidden (403)’, or troubleshoot?

Unlike intentional blocking methods like robots.txt, 403 errors might be configuration issues rather than deliberate choices. Common causes include:

- Server security settings that block Google’s IP addresses

- Password-protected content without proper access provisions

- Overly restrictive firewall rules

- Misconfigured permission settings on files or directories

To check if a page is returning a 403 error:

- Visit the URL directly in your browser

- Use online tools like HTTP Status Code Checker

- Check your server logs for 403 responses to Googlebot

To fix 403 errors affecting pages you want indexed, you need to talk to your web hosting provider. They should:

- Update your server security rules to allow Googlebot

- Check file and directory permissions

- Configure your firewall to recognise search engine crawlers
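Before loosening firewall rules, it’s worth confirming that blocked requests really come from Google, since anyone can put ‘Googlebot’ in a user-agent string. Google’s documented method is a reverse DNS lookup on the visitor’s IP, a check that the hostname belongs to a Google domain, then a forward DNS lookup to confirm the hostname resolves back to the same IP. The Python sketch below follows that method with the standard `socket` module. `is_verified_googlebot` is a hypothetical helper, it only handles IPv4, and it checks `googlebot.com` and `google.com` hostnames only, so compare it against Google’s current verification guide, as some Google fetchers use other domains.

```python
import socket

# Hostname suffixes for Google's crawlers (an assumption based on Google's
# verification guide; some Google fetchers use other domains).
GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com")


def is_verified_googlebot(ip, reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex):
    """Reverse-then-forward DNS check for an IPv4 address claiming to be Googlebot."""
    try:
        # e.g. "crawl-66-249-66-1.googlebot.com"
        hostname = reverse_lookup(ip)[0].rstrip(".").lower()
    except OSError:
        return False  # no reverse DNS record
    if not hostname.endswith(GOOGLE_CRAWLER_DOMAINS):
        return False
    try:
        forward_ips = forward_lookup(hostname)[2]
    except OSError:
        return False
    # The hostname must resolve back to the same IP, or the PTR record could be faked.
    return ip in forward_ips


# With the real DNS lookups this needs network access:
# print(is_verified_googlebot("66.249.66.1"))
```

The lookup functions are parameters so the logic can be tested without touching the network, and so you can swap in a caching resolver if you check many IPs.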

Duplicate, Google chose different canonical than user

When you see ‘Duplicate, Google chose different canonical than user’ in your index report, it means Google has ignored your canonical suggestions and chosen a different URL as the main version of the page. This happens when Google thinks another page better represents the content.

Ultimately, Google takes canonical tags as suggestions, not directives. Google can override them when it finds contradicting signals like:

- Internal links pointing predominantly to a different version

- External links favouring another URL

- Redirects that conflict with your tags

- Stronger content on a similar page

- Inconsistent canonical tags across similar pages

Should you ignore ‘duplicate, Google chose different canonical than user’ or troubleshoot?

This status doesn’t always mean something is wrong, but it does indicate that Google disagrees with your preferred URL structure. To address this issue, you need to:

- Check for mixed signals across your site

- Make sure all versions of similar content consistently reference the same canonical URL

- Align your internal linking with your canonical preferences

- Consider using 301 redirects instead of relying solely on tags
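Mixed signals are easier to spot when you look at one group of duplicate URLs at a time. The Python sketch below assumes you’ve already crawled your site and recorded which canonical each URL in the group declares, plus every internal link target. It flags two of the signals described above (inconsistent canonical tags, and internal links favouring a different version), plus pages with no canonical tag at all. `canonical_signal_report` and the example data are hypothetical.

```python
from collections import Counter


def canonical_signal_report(declared, internal_links):
    """Flag mixed canonical signals within one group of duplicate URLs.

    declared: URL -> canonical URL that page declares (None if it has no tag)
    internal_links: every internal link target found in a crawl of the site
    """
    targets = {c for c in declared.values() if c}
    if not targets:
        return ["no canonical tags in this group"]
    if len(targets) > 1:
        return [f"group points at {len(targets)} different canonicals"]
    canonical = targets.pop()
    problems = []
    missing = sorted(url for url, c in declared.items() if c is None)
    if missing:
        problems.append(f"no canonical tag on: {', '.join(missing)}")
    # Internal links are a canonical signal too: the preferred URL should get the most.
    link_counts = Counter(internal_links)
    most_linked = max(declared, key=lambda url: link_counts[url])
    if most_linked != canonical and link_counts[most_linked] > link_counts[canonical]:
        problems.append(f"internal links favour {most_linked} over {canonical}")
    return problems


shoes = "https://example.com/shoes/"
sorted_shoes = "https://example.com/shoes/?sort=price"
declared = {shoes: shoes, sorted_shoes: shoes}
links = [sorted_shoes] * 5 + [shoes] * 2
print(canonical_signal_report(declared, links))
# ['internal links favour https://example.com/shoes/?sort=price over https://example.com/shoes/']
```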

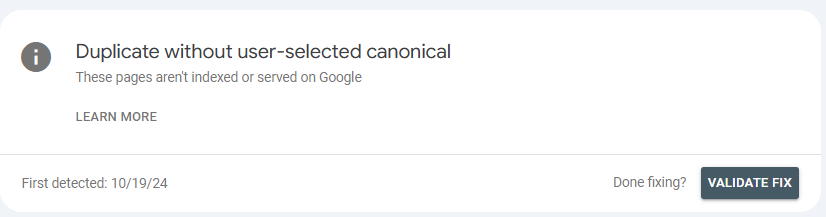

Duplicate without user-selected canonical

‘Duplicate Without User-Selected Canonical’ means that you have two or more similar pages and haven’t chosen one as canonical, so Google automatically chooses which one to index.

Should you ignore ‘duplicate without user-selected canonical’, or troubleshoot?

You don’t want Google to make this decision for you, since it might not choose the version you’d prefer to index. Mark the most valuable page as canonical.

If you want multiple similar pages indexed, you need to further differentiate the pages from each other. Make sure the title tags, URLs, meta descriptions and content are different enough that Google and users can easily tell them apart.
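To find pages that are too alike to tell apart, start with their title tags and meta descriptions. This Python sketch assumes you have a crawl export mapping each URL to its title and meta description; `find_duplicate_fields` and the example data are hypothetical. It only catches exact matches after ignoring case and extra spaces, so near-duplicates still need a human eye.

```python
from collections import defaultdict


def find_duplicate_fields(pages, field):
    """Group URLs that share the same value for `field` (e.g. "title").

    pages: URL -> {"title": ..., "meta_description": ...} from a crawl export
    Returns {normalised value: [URLs]} for values used on more than one URL.
    """
    groups = defaultdict(list)
    for url, data in pages.items():
        # Ignore case and repeated whitespace when comparing.
        value = " ".join((data.get(field) or "").lower().split())
        if value:
            groups[value].append(url)
    return {value: urls for value, urls in groups.items() if len(urls) > 1}


pages = {  # hypothetical crawl export
    "/red-shoes": {"title": "Shoes | Example Store"},
    "/blue-shoes": {"title": "Shoes |  Example Store"},
    "/boots": {"title": "Boots | Example Store"},
}
print(find_duplicate_fields(pages, "title"))
# {'shoes | example store': ['/red-shoes', '/blue-shoes']}
```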

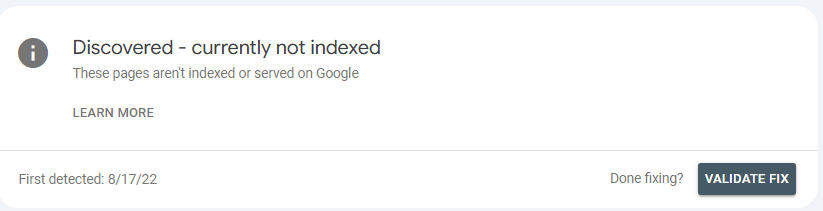

Discovered – currently not indexed

When your index report shows ‘discovered – currently not indexed’, it means Google knows your page exists but hasn’t crawled it yet.

This differs from ‘crawled – currently not indexed’ because Google hasn’t read the content yet. It only knows the URL exists, usually from links or your sitemap.

This status commonly appears for:

- New pages Google hasn’t prioritised for crawling yet

- Pages deep within your site architecture

- Pages with few internal links pointing to them

- Content on new websites that are still building authority

- Pages on sites with crawl budget limitations

Google uses its crawl budget selectively, focusing on pages it considers most valuable. When Google discovers more URLs than it can crawl, it creates a queue and prioritises based on perceived importance.
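Two of the causes above, pages deep in your site architecture and pages with few internal links, are easy to measure from a crawl of your internal links. This Python sketch assumes you have that link data as a map from each page to the pages it links to. It counts how many clicks each page is from the homepage (a breadth-first search) and how many internal links point at each page. `crawl_depths` and the example site are hypothetical, and Google doesn’t publish a ‘too deep’ threshold, so use the numbers to compare pages rather than as a pass/fail test.

```python
from collections import Counter, deque


def crawl_depths(links, home="/"):
    """Breadth-first search over a site's internal links.

    links: page -> list of internal pages it links to (from your own crawl)
    Returns (depth, inbound): clicks from `home` to each reachable page, and
    the number of internal links pointing at each page.
    """
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit is the shortest click path
                depth[target] = depth[page] + 1
                queue.append(target)
    inbound = Counter(target for targets in links.values() for target in targets)
    return depth, inbound


site = {  # hypothetical link data
    "/": ["/services/", "/blog/"],
    "/services/": ["/"],
    "/blog/": ["/blog/page-2/"],
    "/blog/page-2/": ["/blog/old-post/"],
}
depth, inbound = crawl_depths(site)
print(depth["/blog/old-post/"], inbound["/blog/old-post/"])  # 3 1

# Pages in your sitemap that no internal link reaches at all:
sitemap_pages = ["/services/", "/blog/old-post/", "/landing/spring-sale/"]
print([page for page in sitemap_pages if page not in depth])  # ['/landing/spring-sale/']
```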

Should you ignore ‘discovered – currently not indexed’, or troubleshoot?

Unlike some other indexing statuses, this one often resolves itself over time as Google continues to crawl your site.

However, if high-value pages have had this index status for a long time, it’s time to investigate. Google might not consider them valuable enough to crawl.

To improve your chances of getting these pages crawled:

- Add more internal links to these pages from your important content

- Include the pages in your XML sitemap

- Improve page quality and uniqueness

- Share the URLs on social media or other external platforms

- Request indexing through Google Search Console (for your most important pages only)
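If your CMS doesn’t generate a sitemap for you, the format is simple enough to build yourself. This Python sketch writes a minimal XML sitemap following the sitemaps.org protocol (a `<urlset>` of `<url>` entries, each with a `<loc>`). `build_sitemap` is a hypothetical helper, and optional fields like `<lastmod>` are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap, as a string, listing each URL in `urls`."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters like "&" for us, as the protocol requires.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap(["https://yourwebsite.com.au/", "https://yourwebsite.com.au/services/"]))
```

You would save the output as a file such as sitemap.xml on your site and submit it in the Sitemaps section of Google Search Console.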

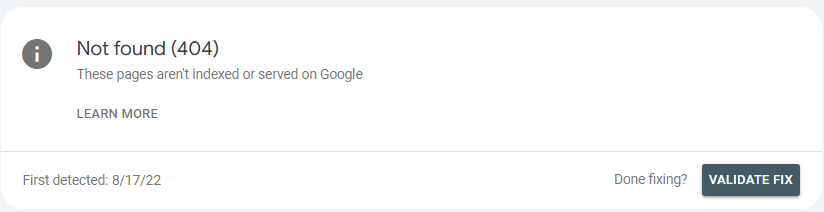

Not found (404)

When your index report shows ‘not found (404)’, it means Google tried to access a URL but your server responded with a 404 error, indicating the page doesn’t exist.

Common causes of 404 errors include:

- Pages you’ve deleted without setting up redirects

- URLs with typos or formatting errors

- Changed URL structures after a website redesign

- Content that moved to a new location

- Links pointing to pages that never existed

Should you ignore ‘not found (404)’, or troubleshoot?

Unlike other indexing issues, 404 errors need different approaches depending on the situation. For pages you meant to remove, you should let Google naturally drop them from the index over time or use Google Search Console’s URL removal tool for faster results.

For pages that should exist, you should:

- Restore the missing content

- Fix any broken URLs

- Check your CMS settings for accidental unpublishing

For pages that moved elsewhere, set up 301 redirects to the new locations, and update your internal links to point straight to the new URLs so visitors and crawlers don’t have to go through redirects or hit 404s.

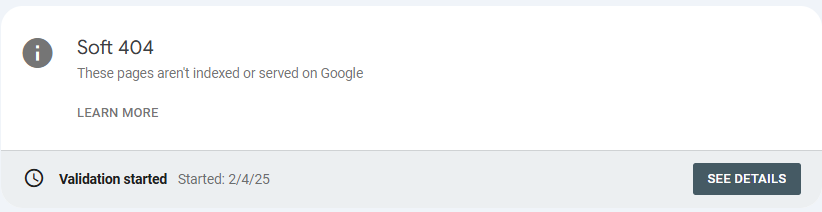

Soft 404

When your index report shows ‘soft 404’, it means Google believes your page is an error page, even though your server returned a normal 200 (success) status code instead of a proper 404 error.

Why Google flags pages as soft 404s

Google typically identifies soft 404s when pages:

- Contain phrases like “not found”, “error”, or “no results”

- Return empty or extremely thin content

- Show a generic ‘no products found’ message or search results with zero matches

- Redirect to your homepage without explaining why the original content is missing

Unlike proper 404 errors that clearly tell both users and search engines a page doesn’t exist, soft 404s send mixed signals that can confuse Google.
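Google doesn’t publish exactly how it classifies soft 404s, but you can run a rough self-audit for the patterns listed above. This Python sketch flags pages that return a 200 status but either mention an error phrase or have very little visible text (pass it the page’s visible text, not raw HTML). The phrase list and the 50-word threshold are assumptions to tune for your site, `looks_like_soft_404` is hypothetical, and it will produce false positives, since a normal page can contain the word ‘error’.

```python
import re

# Phrases from typical error pages: an assumption, tune for your site's wording.
ERROR_PHRASES = ("not found", "no results", "no products found", "error")


def looks_like_soft_404(status, visible_text, min_words=50):
    """Rough check: a 200 response whose visible text reads like an error page."""
    if status != 200:
        return False  # pages already returning 4xx/5xx aren't soft 404s
    lowered = visible_text.lower()
    word_count = len(re.findall(r"\w+", visible_text))
    mentions_error = any(phrase in lowered for phrase in ERROR_PHRASES)
    return mentions_error or word_count < min_words


print(looks_like_soft_404(200, "Sorry, no products found."))  # True
print(looks_like_soft_404(404, "Page not found"))  # False
```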

How to fix soft 404 issues

For pages that genuinely don’t exist, configure your server to return 404 status codes rather than 200 codes with error messages. This sends clear signals to Google about the status of these pages.
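The fix is to send the right status code while still showing visitors a friendly message. Here’s a minimal sketch as a Python WSGI app (standard library only); the `PRODUCTS` catalogue and paths are hypothetical stand-ins for your own data. Most CMSs and frameworks have their own way of setting a 404 status, so treat this as an illustration of the principle rather than a drop-in fix.

```python
# Hypothetical product catalogue: path -> page text.
PRODUCTS = {"/products/blue-widget": "Blue widget: in stock."}


def app(environ, start_response):
    """Minimal WSGI app: unknown product URLs get a real 404 status code."""
    path = environ.get("PATH_INFO", "/")
    if path in PRODUCTS:
        status, text = "200 OK", PRODUCTS[path]
    else:
        # Still show visitors a helpful message, but send it with a 404 status.
        status, text = "404 Not Found", "Sorry, we couldn't find that product. Try our search."
    body = text.encode("utf-8")
    start_response(status, [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]
```

To try it locally, you could serve it with `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` and request a product URL that doesn’t exist.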

For thin content pages you want to keep, improve them by adding a substantial amount of helpful content. Make sure search results pages with no matches offer helpful suggestions or alternative products rather than just empty results.

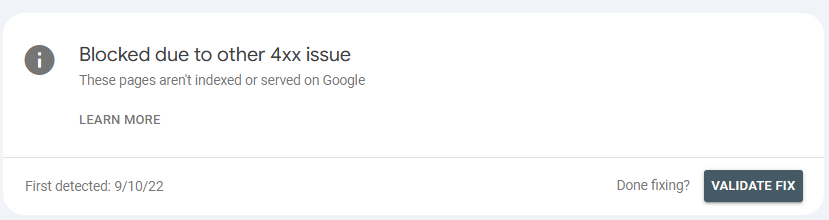

URL blocked due to other 4xx issue

When you see ‘URL blocked due to other 4xx issue’ in your index report, it means Google tried to access the page but encountered a client error response code other than 404. These 4xx errors tell visitors and search engines that the requested resource can’t be accessed due to a client-side problem.

Common 4xx errors besides 404 include:

- 401 (Unauthorised): The page requires login credentials

- 403 (Forbidden): The server refuses to grant access

- 410 (Gone): The page has been permanently removed

- 429 (Too Many Requests): Rate limiting has been triggered

Should you ignore ‘URL blocked due to other 4xx issue’, or troubleshoot?

Unlike 404 errors, which just mean a page wasn’t found, these other 4xx errors often indicate configuration issues with your website that need attention. They can prevent Google from indexing valuable content and frustrate users.

To fix these issues:

- Check server access permissions for 401/403 errors

- Check your rate-limiting settings if you see 429 errors

- Check authentication is working correctly

- Check important pages don’t accidentally require login

Use browser developer tools or online HTTP status checkers to identify exactly which 4xx error is occurring, because Google won’t tell you. Once you know the specific error, you can take targeted steps to resolve it.
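If you’d rather script the check, Python’s standard library can fetch a URL and report its status code. In this sketch, `check_status` and the hint wording are hypothetical. It follows redirects (so you see the final page’s status), and it sends a generic user agent, so a server that treats Googlebot differently may respond differently to Google.

```python
import urllib.error
import urllib.request

# Hypothetical hints for the 4xx codes listed above.
FOUR_XX_HINTS = {
    401: "Needs login: check authentication settings",
    403: "Access refused: check permissions and firewall rules",
    410: "Permanently removed: confirm this was intended",
    429: "Rate limited: check rate-limiting settings",
}


def check_status(url, timeout=10):
    """Fetch `url` and return (status_code, hint). Redirects are followed."""
    request = urllib.request.Request(url, headers={"User-Agent": "status-check/0.1"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            code = response.status
    except urllib.error.HTTPError as err:
        code = err.code  # urllib raises HTTPError for 4xx and 5xx responses
        err.close()
    return code, FOUR_XX_HINTS.get(code, "")


# Example (needs network access):
# print(check_status("https://yourwebsite.com.au/members/"))
```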

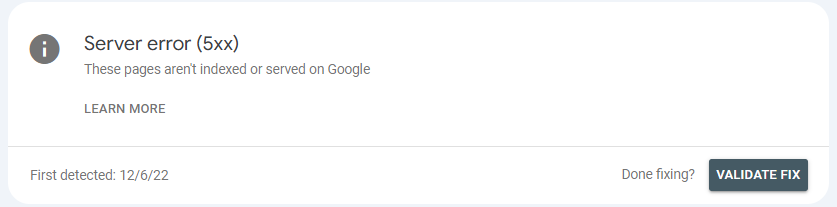

Server error (5xx)

When your index report shows ‘server error (5xx)’, it means Google tried to access your page but your server failed to process the request properly.

The most common server errors include 500 (Internal Server Error), 503 (Service Unavailable), and 504 (Gateway Timeout). Each one indicates a different problem with your server’s ability to handle requests.

These errors typically stem from:

- Server resource limitations

- PHP or database errors

- Plugin conflicts

- Server configuration issues

- Temporary hosting problems

They’re often intermittent, making them frustrating to diagnose.

Should you ignore ‘server error (5xx)’, or troubleshoot?

Don’t ignore 5xx errors in your indexing reports. Google will eventually stop trying to crawl pages that consistently return these error codes, severely handicapping your ability to show up in search.

Check your server logs to identify the specific error pattern, then test the affected URLs in your browser to see if the problem persists or only occurs for search engine crawlers.
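If your server writes access logs in the common ‘combined’ format used by Apache and Nginx, a short script can group the 5xx responses served to Googlebot by URL and status code, which makes patterns easier to see. This Python sketch assumes that log format (adjust the regular expression if yours differs). `googlebot_5xx_summary` is hypothetical, and it trusts the user-agent string, which can be faked, so treat matches as requests claiming to be Googlebot.

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format, an assumption: adjust for your server.
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_5xx_summary(log_lines):
    """Count 5xx responses to requests whose user agent mentions Googlebot."""
    counts = Counter()
    for line in log_lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue
        if match["status"].startswith("5"):
            counts[(match["path"], int(match["status"]))] += 1
    return counts


sample = [
    '66.249.66.1 - - [10/Oct/2024:13:55:36 +1000] "GET /shop/ HTTP/1.1" 503 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]
print(googlebot_5xx_summary(sample).most_common())  # [(('/shop/', 503), 1)]
```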

If you can’t resolve the issue yourself, consult your web developer or hosting provider. They can help identify resource constraints or code issues causing the problems.

Using the URL inspection tool in Google Search Console

The URL Inspection tool in Google Search Console gives you detailed information about specific URLs on your site. It shows you exactly how Google views your pages and helps diagnose problems that might prevent proper indexing.

How to access the URL inspection tool

You’ll find the URL Inspection tool in the search bar at the top of the Google Search Console interface. Enter the full URL you want to check and press Enter. The tool then retrieves Google’s current information about that page.

What you can learn from the URL inspection tool

The URL inspection tool shows information like:

- Whether Google has indexed the page

- Any indexing or crawling errors Google encountered

- How the page appears in Google’s index

- When Google last crawled the page

- The canonical URL Google recognises for the page

You can also see how Google handles a page in real time by clicking ‘Test live URL’, then ‘View tested page’ to see the rendered HTML and a screenshot of the page as Googlebot sees it. This can help identify content that might be hidden from search engines because of JavaScript issues or mobile usability problems.
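The same information is also available through the Search Console API’s URL Inspection method (`urlInspection.index.inspect`), which can help when checking a batch of important URLs within its daily quota. The Python sketch below assumes you’ve already fetched a response for one URL (for example, with Google’s API client library) and only pulls out the fields discussed above. The field names (`verdict`, `coverageState`, `lastCrawlTime`, `googleCanonical`, `userCanonical`) follow the API’s `indexStatusResult` object as we understand it, so check them against Google’s current reference. `summarise_inspection` and the sample values are hypothetical.

```python
def summarise_inspection(response):
    """Pick out key index fields from a URL Inspection API response (a dict)."""
    index = response.get("inspectionResult", {}).get("indexStatusResult", {})
    google_canonical = index.get("googleCanonical")
    user_canonical = index.get("userCanonical")
    return {
        "verdict": index.get("verdict"),         # e.g. "PASS"
        "coverage": index.get("coverageState"),  # the status shown in GSC
        "last_crawl": index.get("lastCrawlTime"),
        "google_canonical": google_canonical,
        "user_canonical": user_canonical,
        # Mirrors the "Google chose different canonical than user" situation.
        "canonical_mismatch": (
            google_canonical is not None
            and user_canonical is not None
            and google_canonical != user_canonical
        ),
    }


# Hypothetical response for one URL:
sample_response = {
    "inspectionResult": {
        "indexStatusResult": {
            "verdict": "PASS",
            "coverageState": "Submitted and indexed",
            "lastCrawlTime": "2024-05-01T10:00:00Z",
            "googleCanonical": "https://yourwebsite.com.au/services/",
            "userCanonical": "https://yourwebsite.com.au/services/",
        }
    }
}
print(summarise_inspection(sample_response)["canonical_mismatch"])  # False
```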

Taking action on indexing problems

For pages with indexing problems, you can request indexing directly through the tool. This feature is useful when you’ve fixed issues on critical pages and want Google to recrawl them quickly.

It’s important to note that Google limits how many URLs you can submit for indexing each day, so use this function selectively for your most important pages rather than submitting your entire site.

What percentage of your pages should be indexed?

Don’t worry about the ‘right’ percentage of pages Google should index. The truth is, there’s no magic number that applies to every site.

Rather than aiming for 100% indexing, which almost no site achieves, focus on getting your most valuable pages indexed. Not every page on your website deserves to appear in search results. Admin pages and pages with thin content don’t benefit searchers and don’t need to be indexed.

Percentage of indexed pages varies from site to site

Some sites will naturally have a lot of pages that aren’t eligible for indexing. For example, eCommerce sites often have lower indexing rates because of:

- Filter pages creating multiple URL variations

- Product sorting pages with no unique content

- Out-of-stock product pages that may be temporarily de-indexed on purpose

Content-focused sites made up of mostly blog posts typically have higher indexing rates since most pages contain valuable information meant for public consumption.

Monitoring changes over time

Rather than fixating on a specific percentage, watch for sudden drops in your indexing rate. A sharp decline might be an indicator of technical issues that need your attention, while a gradual increase often shows Google finds more of your content valuable.
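Spotting a sudden drop is simple arithmetic: compare each period’s indexed page count with the one before it. This Python sketch assumes you’ve noted the indexed page count from the page indexing report at regular intervals; the 20% threshold and the `sudden_drops` helper are assumptions, not Google guidance.

```python
def sudden_drops(indexed_counts, threshold=0.2):
    """Find periods where the indexed page count fell by more than `threshold`.

    indexed_counts: indexed-page totals noted at regular intervals, oldest first.
    Returns a list of (period_index, previous_count, current_count).
    """
    drops = []
    for i in range(1, len(indexed_counts)):
        previous, current = indexed_counts[i - 1], indexed_counts[i]
        if previous > 0 and (previous - current) / previous > threshold:
            drops.append((i, previous, current))
    return drops


weekly_indexed = [1200, 1210, 1195, 880, 890]  # hypothetical weekly readings
print(sudden_drops(weekly_indexed))  # [(3, 1195, 880)]
```

Here the fall from 1,195 to 880 is about 26%, which crosses the 20% threshold, while the small week-to-week wobbles don’t.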

The most important question isn’t ‘how many’ but ‘which’ pages are indexed. Make sure your revenue-generating pages appear in Google’s index. These are the ones that truly matter to your business.

How long does Google’s indexing process take?

To use the favourite phrase of every SEO expert: It depends. Google’s indexing timeline varies based on a lot of factors, and there’s no guaranteed timeframe for when your pages will appear in search results.

For established websites with high authority, Google often crawls new pages within days and indexing might happen within a week.

For new websites or those with lower authority, the process can take much longer. It can take weeks or even months for Google to regularly crawl and index content.

Domain Authority (DA)

Glossary: Domain Authority (DA) is a score developed by the SEO software company Moz to predict how likely a website is to rank on Search Engine Results Pages (SERPs). It’s based on a scale from 1 to 100, with higher scores indicating a greater ability to rank. The score is calculated mainly from the quantity and quality of backlinks pointing to the website.

See also: Search Engine Results Pages (SERPs), Backlinks, Ranking factors

Further reading: Best Free SEO Tools We Use

Does ‘validate fix’ or ‘request indexing’ work?

Google Search Console offers two options that promise faster attention to your pages: ‘Validate Fix’ for resolving errors and ‘Request Indexing’ for getting pages in SERPs. But how well do they actually work?

Request indexing

The ‘Request Indexing’ feature does work, but with limitations:

- It places your URL in a priority crawl queue

- Google typically recrawls the page within a few days

- It doesn’t guarantee indexing, only recrawling

Even after requesting indexing, Google might still decide not to index your page if it finds quality issues or other problems. Think of this tool as asking Google to take another look, not demanding inclusion in search results.

Validate fix

When you fix issues reported in Google Search Console, the ‘Validate Fix’ button helps confirm your changes worked. It tells Google to check a sample of affected URLs, and then Google recrawls these pages to see if you’ve fixed the reported issues. The process can take days or weeks, depending on how many URLs need checking.

These tools work best when you’ve made real improvements to your pages. They can speed up the process of getting Google to notice your changes, but they can’t override Google’s fundamental quality standards.

You should use these options sparingly and only focus on your most important pages. Overusing them won’t help since Google limits how many URLs you can submit this way.